A Beginner’s Reading List for Large Language Models for 2026

In this article, you will learn how to build a beginner-friendly 2026 reading plan for large language models (LLMs), from core concepts to scaling, re-architecting, and practical applications.

Topics we will cover include:

- Foundational, conceptual, and hands-on resources for understanding LLMs.

- Guides for scaling and re-architecting LLMs to meet practical constraints.

- Selected research and domain-focused readings to broaden your perspective.

Let’s not waste any more time.


Image by Editor

Introduction

The hype wave around large language models (LLMs) shows no sign of fading: LLMs keep reinventing themselves at a rapid pace and transforming the industry as a whole. It is no surprise that more and more people unfamiliar with their inner workings want to start learning about them, and what could be better for setting off on a learning journey than a reading list?

This article brings together a list of relevant readings to put on your radar if you are just getting started with LLMs. It focuses on the basics and foundations, and it is supplemented by additional readings to help you go the extra mile in important areas like scaling and re-architecting LLMs. There is also room for research studies and application-oriented, domain-specific readings where LLMs are the protagonists.

The 2026 LLM Reading List You Were Waiting For

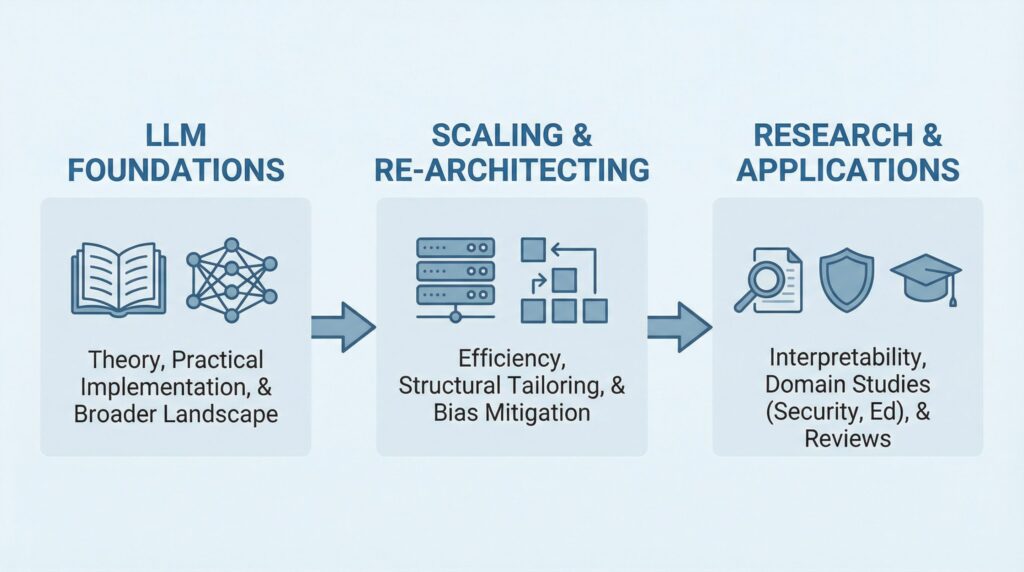

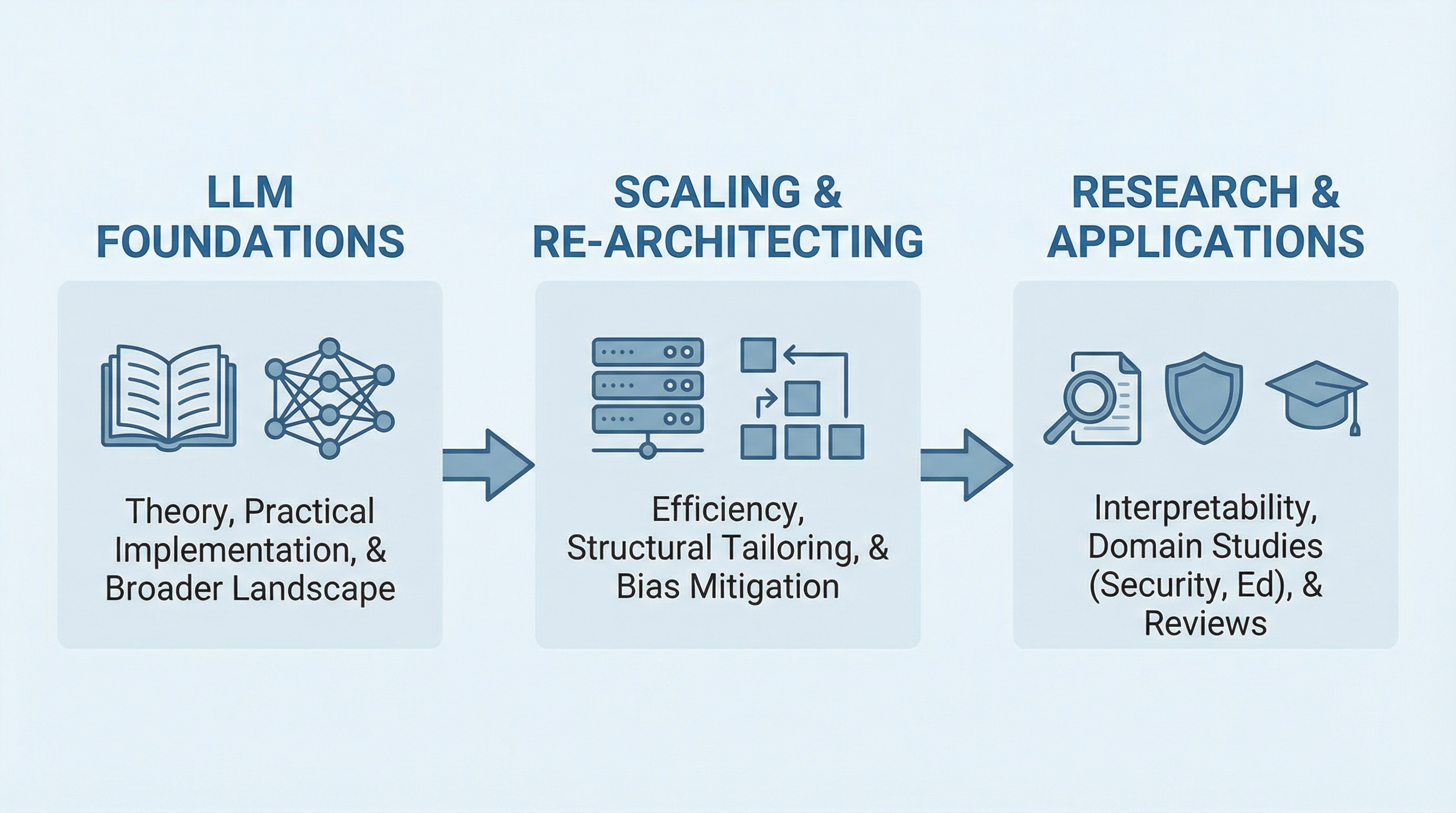

Below is the reading list we curated for you, divided into three blocks.

For beginners in LLMs, the natural starting point is the first block, which covers the foundations of LLMs. Once you are familiar with these, continue to either of the other two blocks, depending on your preferences and needs, to deepen your knowledge.

- Conceptual and Practical LLM Foundations

- Scaling and Re-architecting LLMs

- Salient Research Studies and Application-Oriented Texts

Conceptual and Practical LLM Foundations

Do not miss these three comprehensive and open resources for building a solid foundation in LLMs. They complement each other well, as you will notice!

One of the latest and most accessible resources to learn the foundations of LLMs end to end is Tong Xiao and Jingbo Zhu’s eBook “Foundations of Large Language Models”, currently available on arXiv. The book is organized around five pillars associated with core concepts: pre-training, generative models, prompting, alignment, and inference. Recommended for: deep theoretical understanding.

Pere Martra’s repository, and in particular his “Large Language Model Notebooks” course, is an excellent, comprehensive, and beginner-friendly resource for gaining practical knowledge of LLM use and implementation. Martra is the author of the acclaimed book “Large Language Models Projects”, and his repository provides plenty of regularly updated lessons enriched with hands-on examples and Python notebooks. Recommended for: practical, hands-on learning.

Another resource worth keeping an eye on, because it is regularly updated, is the freely available eBook release of Dan Jurafsky and James H. Martin’s “Speech and Language Processing”. Their work adopts a broader perspective, looking not only at LLMs but also at related and preceding types of models across the deep learning landscape. Both PDFs and slide decks are available for download, so you can learn using your favorite format. Recommended for: a wide look at the part of the current AI landscape surrounding LLMs.

Scaling and Re-architecting LLMs

Once you are familiar with the foundations, two LLM trends of growing importance are worth exploring: building and maintaining models that scale, and re-architecting existing LLMs to adapt them to specific needs or to work around challenges and limitations.

For scalability in LLMs, “How to Scale Your Model”, developed by Google DeepMind scientists, is a valuable resource touching on diverse practical aspects like TPUs, sharded matrices, the math behind transformers, and much more.
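To give a feel for what "sharded matrices" means before you dive into that guide, here is a minimal pure-Python sketch. It is not taken from the guide: the function names are hypothetical, and the "devices" are just list entries rather than real TPUs. It splits a weight matrix into column blocks, multiplies the input by each block separately, and checks that stitching the partial results together matches the unsharded product.

```python
# Toy illustration of a column-sharded matrix multiply, in pure Python.
# Assumption: "devices" are simulated as list entries; no accelerator is used.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def shard_columns(w, num_shards):
    """Split matrix w into num_shards blocks of contiguous columns."""
    cols = len(w[0])
    assert cols % num_shards == 0, "columns must divide evenly across shards"
    width = cols // num_shards
    return [[row[i * width:(i + 1) * width] for row in w] for i in range(num_shards)]

def sharded_matmul(x, w, num_shards):
    """Each simulated device multiplies x by its own column block of w;
    the partial outputs are then concatenated along the column axis."""
    partials = [matmul(x, shard) for shard in shard_columns(w, num_shards)]
    return [sum((p[r] for p in partials), []) for r in range(len(x))]

x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]

full = matmul(x, w)
sharded = sharded_matmul(x, w, num_shards=2)
print(full == sharded)  # the sharded result matches the unsharded one
```

On real hardware, the interesting part is what this sketch hides: the cost of moving the input and the partial results between devices, which is exactly the kind of trade-off a scaling guide helps you reason about.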

Re-architecting LLMs is a critical topic that still lacks sufficient reading resources. Here it is worth mentioning Pere Martra’s extensive educational work on LLMs again, this time his upcoming book “Rearchitecting LLMs: structural techniques for efficient models”. At the time of writing, you can read the first two chapters at no cost, both of them approachable and self-contained: one on why tailoring LLM architectures matters, and one that walks through an end-to-end architectural tailoring project. Plenty of hands-on material accompanies the theoretical notions and insights.

Bias in LLMs, specifically in transformer architectures, is another topic addressed in this read from a practitioner’s perspective. It explores how internal neuron activations can help reveal subtle, hidden biases, and how strategies like pruning can be used to obtain bias-resilient, efficient models.
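As a rough intuition for the idea of using activations to guide pruning, the toy sketch below scores each neuron by how differently it activates on two groups of prompts and then masks the most divergent one. This is only an illustrative assumption, not the method from the read above: the activation values are made up, the function names are hypothetical, and real work would extract activations from an actual model.

```python
# Toy sketch: score neurons by how differently they activate for two prompt
# groups, then mask (prune) the most divergent ones.
# Assumption: all activation values below are invented for illustration.

def mean_per_neuron(activations):
    """Average each neuron's activation over a list of per-prompt vectors."""
    n = len(activations)
    return [sum(col) / n for col in zip(*activations)]

def divergence_scores(group_a, group_b):
    """Absolute gap between the two groups' mean activation, per neuron."""
    means_a = mean_per_neuron(group_a)
    means_b = mean_per_neuron(group_b)
    return [abs(a - b) for a, b in zip(means_a, means_b)]

def prune_mask(scores, num_to_prune):
    """Return a 0/1 mask that zeroes the num_to_prune highest-scoring neurons."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    pruned = set(ranked[:num_to_prune])
    return [0 if i in pruned else 1 for i in range(len(scores))]

# Hypothetical activations of 4 neurons for two sets of prompts that differ
# only in a demographic term.
group_a = [[0.2, 1.0, 0.5, 0.1], [0.4, 1.2, 0.5, 0.3]]
group_b = [[0.3, 0.1, 0.5, 0.2], [0.3, 0.3, 0.5, 0.2]]

scores = divergence_scores(group_a, group_b)
mask = prune_mask(scores, num_to_prune=1)
print(mask)  # the neuron at index 1 diverges most, so it is the one masked
```

In practice, you would also measure how much task performance drops after masking, since a neuron that reacts to a sensitive attribute may still carry useful information.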

Salient Research Studies and Application-Oriented Texts

Let’s wrap up with some examples of research-oriented studies and application-oriented texts about the use and development of LLMs.

- The text by Jenny Kunz studies how to understand LLMs from the viewpoint of interpretability, using probing classifiers and self-rationalization.

- This edited Springer book goes deep into LLMs in the field of cybersecurity, highlighting how LLM-driven applications are reshaping the field and its defense strategies, and how to navigate the related challenges.

- LLMs also have a lot to offer in education, and this study provides a comprehensive review of LLMs in learning environments.

- Last but not least, this read provides an interdisciplinary review of LLMs in a variety of application fields.

Wrapping Up

This article put together a list of recent, relevant readings suitable for absolute beginners who want to familiarize themselves with LLMs in 2026. The list is complemented by additional reads for going deeper into this growing area of artificial intelligence.

NOTE: For resources where the number of authors or editors was large, author names have not been explicitly listed in the article for the sake of clarity and brevity.